.innaitt()

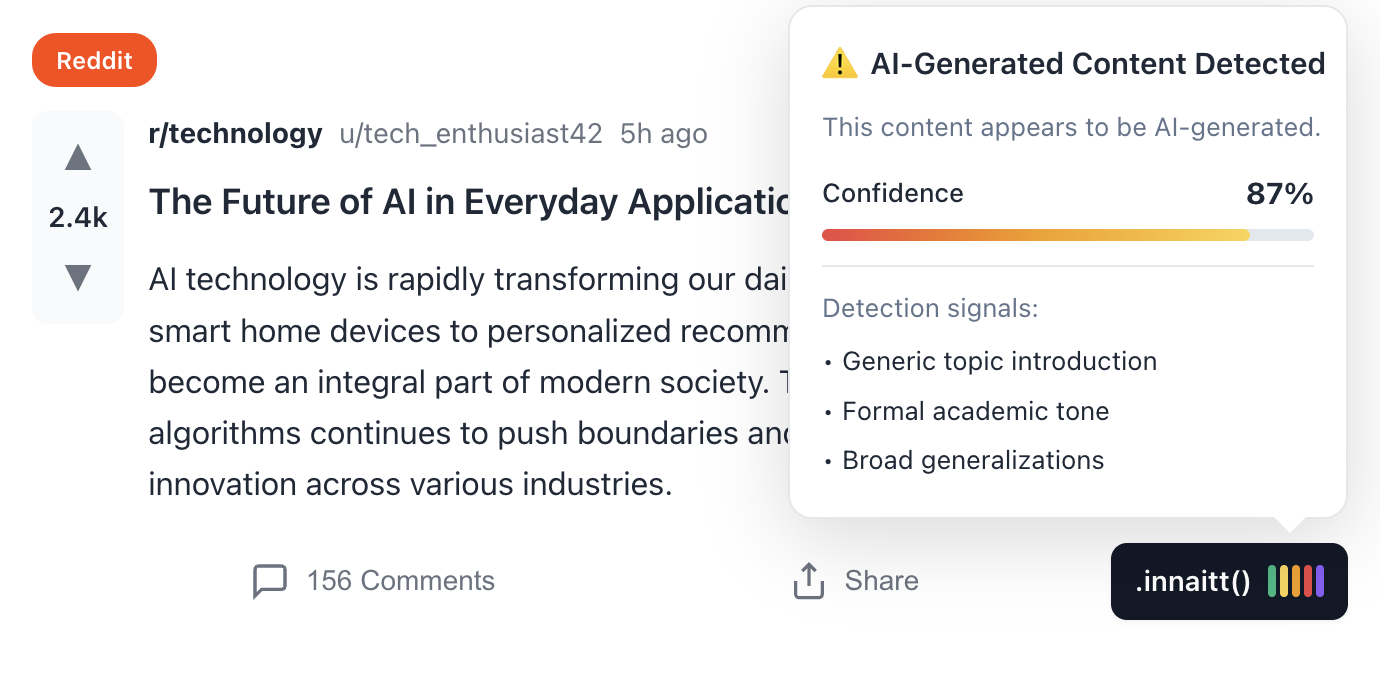

On-page indicators for AI-generated content across LinkedIn, Reddit, X, and more. Coming soon as an extension for your favorite browser.

Our Manifesto

We live in a moment where AI-generated content is fast becoming the default. As it spreads across the internet (and into our daily lives), we can't (and shouldn't) look away. We believe that (1) AI itself isn't inherently good or bad, and (2) the responsibility rests with us, the humans who can benefit from it, to apply critical thinking when using it.

As AI amplifies human creativity, our shared goal should be to foster new trust mechanisms designed for the human-AI duo, ensuring transparency and accountability for everyone. That's why we're taking a proactive stance: making AI authorship transparent.

There are several actors building a trustworthy future for AI authorship:

1. Human creators control the context that shapes the meaning of what they create, even when that's a simple social media post. We believe human creators should embrace transparency as a badge of integrity, openly sharing when and how AI contributed to their work. This strengthens the bond of trust with their audiences and leads the cultural shift toward responsible co-creation.

2. Human users, as consumers of content (of any kind, not just social media), must cultivate awareness, question what they see, and reward honesty. Trust is forged by engaging with AI consciously, knowing the difference between automation and authorship, between assistance and manipulation.

3. Businesses of every size can normalize ethical practice. Whether through transparent AI policies, content provenance standards, or incentives for disclosure, companies can set the tone for a marketplace where innovation and integrity reinforce one another. Businesses that lead with openness will build the most enduring relationships.

4. Platforms are, as we see them, the connective tissue of our digital life, shaping what we see and believe. They should embed transparency into their infrastructure as a core design principle. By encouraging AI authorship disclosure, they can transform engagement into informed participation.

We begin by flagging social media posts that are likely AI-generated or AI-assisted, giving people immediate context for what they're seeing. Our soon-to-launch browser extension displays a confidence level and the rationale behind each label, using a clear spectrum:

1 = AI, 2 = Mostly AI, 3 = Human-AI, 4 = Mostly Human, 5 = Human.
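
For readers curious how such a label might be represented, here is a minimal sketch in TypeScript. It is an illustration only, not the extension's actual code: the names `AuthorshipScore`, `AuthorshipIndicator`, and `formatIndicator` are hypothetical, and the sketch assumes confidence is expressed as a number between 0 and 1.

```typescript
// Hypothetical data model for one on-page indicator.
// None of these names come from the real extension.
type AuthorshipScore = 1 | 2 | 3 | 4 | 5;

// The five-point spectrum, exactly as listed above.
const AUTHORSHIP_LABELS: Record<AuthorshipScore, string> = {
  1: "AI",
  2: "Mostly AI",
  3: "Human-AI",
  4: "Mostly Human",
  5: "Human",
};

interface AuthorshipIndicator {
  score: AuthorshipScore; // position on the 1-5 spectrum
  confidence: number;     // assumed to range from 0 to 1
  rationale: string;      // short explanation shown next to the label
}

// Builds the text a reader would see beside a post.
function formatIndicator(indicator: AuthorshipIndicator): string {
  const percent = Math.round(indicator.confidence * 100);
  const label = AUTHORSHIP_LABELS[indicator.score];
  return `${label} (${percent}% confidence): ${indicator.rationale}`;
}

// Example usage with made-up values.
const example: AuthorshipIndicator = {
  score: 2,
  confidence: 0.8,
  rationale: "Author disclosed AI drafting with light human edits.",
};
console.log(formatIndicator(example));
```

Keeping the score, the confidence, and the rationale together in one record means a label is never shown without the context that explains it.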

We're prioritizing human curation wherever possible, working with verified moderators and enabling users to label posts themselves. At the same time, creators (individuals and organizations) receive credit for disclosure, including a trust badge recognizing their commitment to ethical AI authorship transparency.

AI is part of our future. Let's use it to elevate our humanity, starting with the simplest, most empowering step: helping people more easily recognize AI-generated (and masked) content.

Sign the manifesto and join the early access program.